Using Multi-LLM Systems for Investigations and Ediscovery: Fast, Flexible and Cost-Effective

By John Tredennick and Dr. William Webber

The legal industry is witnessing a revolution with the adoption of AI in its core functions. There are hundreds, if not thousands, of new software applications using generative AI. Most use OpenAI’s latest large language model (LLM), GPT 4, and so are seemingly locked into one vendor and one model.

We chose a different approach for DiscoveryPartner, our AI-powered ediscovery platform. Rather than rely on a single model, it accesses GenAI products from multiple vendors. Why do this? Because it affords the flexibility to use the best, most cost-effective LLM for each task.

How Does a Multi-LLM System Work?

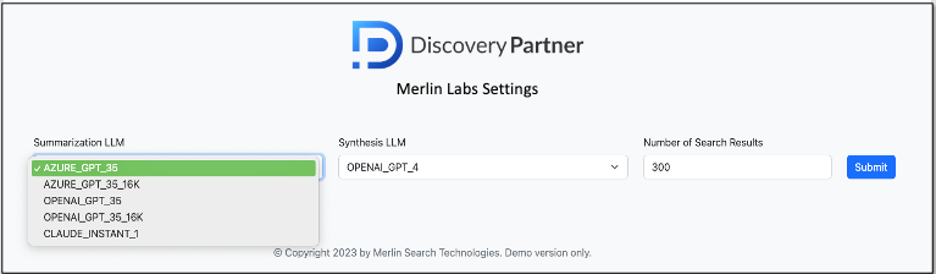

DiscoveryPartner uses LLMs from several vendors, including OpenAI, Microsoft, and Anthropic, for document analysis. It supports two key functions, summarization and synthesis, which together illustrate a new paradigm in legal investigations.

Document Summarization

Once relevant documents are identified, the user can direct the system to summarize them based on a topic description. Specifically, you tell the LLM about the nature of your investigation and the kind of information you want to review. You might also ask it to identify people and dates mentioned in the document, particularly as they relate to your topic.

DiscoveryPartner offers these options for document summarization:

We have found that OpenAI’s GPT 3.5 “turbo”, in both the standard (4K) and 16K window sizes, is quick and efficient, and provides document summaries roughly equivalent to the output from GPT 4. In fact, GPT 3.5 is faster at producing summaries and costs roughly 1/50th as much as GPT 4. For that reason we recommend using the less powerful model for this function, particularly when you are summarizing 300 or more documents.

We also offer Anthropic’s Claude Instant (100K) LLM. This has less horsepower than GPT 4 or Claude 2, but it is comparable to GPT 3.5 and has a much larger context window (100K tokens vs. 4K or 16K with GPT 3.5). That makes it well suited to analyzing larger documents that won’t fit in a smaller context window. We recommend using Claude Instant to summarize larger documents, including transcripts and text messages (which we can ingest whole).
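The choice among these summarization options can be pictured as a simple routing rule: use the smallest context window that fits the document. The sketch below is illustrative only and is not DiscoveryPartner’s actual logic. The model labels, the four-characters-per-token estimate, and the `reserved_tokens` allowance are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str            # illustrative label, not a real API model ID
    context_tokens: int  # context window size as described in the article


# Summarization options from the text, ordered smallest window first.
SUMMARIZATION_MODELS = [
    ModelOption("gpt-3.5-turbo-4k", 4_000),
    ModelOption("gpt-3.5-turbo-16k", 16_000),
    ModelOption("claude-instant-100k", 100_000),
]


def estimate_tokens(text: str) -> int:
    """Rough heuristic (assumption): about 4 characters per token."""
    return len(text) // 4 + 1


def pick_summarizer(document: str, reserved_tokens: int = 1_000) -> ModelOption:
    """Return the smallest-window model that fits the document plus an
    allowance for the prompt and the summary itself."""
    needed = estimate_tokens(document) + reserved_tokens
    for option in SUMMARIZATION_MODELS:
        if needed <= option.context_tokens:
            return option
    raise ValueError("Document exceeds the largest context window; split it first.")
```

Under these assumptions a short email lands on the 4K model, while a long transcript is routed to Claude Instant.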

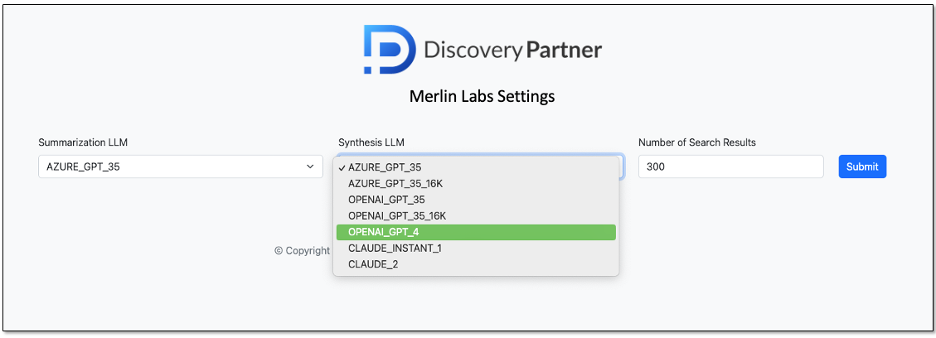

Synthesis/Reporting

The second step is to synthesize and report on information across the documents selected for summarization. This typically involves a high-level analysis of the documents tailored to your information need and more complex reporting requests.

For synthesizing and reporting across multiple documents, we recommend top-of-the-line LLMs like GPT 4 and Claude 2, which excel at providing insightful reports on legal issues, positions, people involved, and timelines. Both cost more than their lighter-weight counterparts and are a bit slower to respond, but the quality of the output is worth the extra cost and wait.

Thus, at any given time, we might suggest Claude Instant 100K for summarizing a set of documents and GPT 4 to synthesize and report across them. A user can also switch from one LLM to another, e.g. from Claude 2 to GPT 4, to elicit two different perspectives for a report.
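The two-step workflow can be sketched as a small pipeline: a fast model summarizes each document, and a stronger model reports across the summaries. This is a minimal sketch under stated assumptions, not the platform’s implementation. The function names, prompt wording, and stand-in models (`fast_model`, `strong_model_a`, `strong_model_b`) are hypothetical, and a real system would wrap vendor API clients instead.

```python
from typing import Callable, List

# For illustration, an LLM is any function that maps a prompt to a reply.
LLM = Callable[[str], str]


def summarize_all(documents: List[str], topic: str, summarizer: LLM) -> List[str]:
    """Step 1: summarize each document against the topic description."""
    template = (
        "Investigation topic: {topic}\n"
        "Summarize the document below as it relates to the topic. "
        "List the people and dates it mentions.\n\n{doc}"
    )
    return [summarizer(template.format(topic=topic, doc=doc)) for doc in documents]


def synthesize(summaries: List[str], topic: str, request: str, synthesizer: LLM) -> str:
    """Step 2: report across all summaries with a more capable model."""
    numbered = "\n".join(f"[{i}] {s}" for i, s in enumerate(summaries, start=1))
    return synthesizer(
        f"Investigation topic: {topic}\nRequest: {request}\n\nSummaries:\n{numbered}"
    )


# Stand-in models so the sketch runs without any vendor account.
def fast_model(prompt: str) -> str:
    return "summary of " + prompt.splitlines()[-1][:30]


def _count_summaries(prompt: str) -> int:
    return sum(line.startswith("[") for line in prompt.splitlines())


def strong_model_a(prompt: str) -> str:
    return f"Report A covering {_count_summaries(prompt)} summaries"


def strong_model_b(prompt: str) -> str:
    return f"Report B covering {_count_summaries(prompt)} summaries"


docs = [
    "Email from Pat on 3 May about the merger.",
    "Memo from Lee on 9 May about pricing.",
]
topic = "merger negotiations"
summaries = summarize_all(docs, topic, fast_model)

# Reuse the same summaries with two synthesis models for two perspectives.
reports = {
    name: synthesize(summaries, topic, "Build a timeline.", model)
    for name, model in {"model A": strong_model_a, "model B": strong_model_b}.items()
}
```

Keeping the summaries separate from the synthesis step means the cheaper work is done once, and only the final report is regenerated when the user switches models.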

Why Build a Multi-LLM Framework?

A multi-LLM framework gives our clients the flexibility to use different models for different purposes. It also allows them to use faster and cheaper models for routine work, while reserving the most sophisticated (and expensive) models for synthesis, analysis and reports. It’s faster, more flexible and more cost effective than relying on a single model from a single vendor.
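A back-of-the-envelope calculation shows why the split matters. In the sketch below, only the 1:50 cost ratio (GPT 3.5 vs. GPT 4) and the 300-document figure come from this article. The token counts are hypothetical, costs are in relative units rather than actual vendor prices, and only the tokens fed into each step are counted.

```python
# Relative cost per token. Only the 1:50 ratio comes from the article;
# these are not actual vendor prices.
CHEAP_RATE = 1
PREMIUM_RATE = 50

# Hypothetical workload.
NUM_DOCS = 300            # document count mentioned in the article
TOKENS_PER_DOC = 2_000    # assumed average document length
TOKENS_PER_SUMMARY = 100  # assumed average summary length


def workload_cost(summary_rate: int, synthesis_rate: int) -> int:
    """Cost in relative units: summarize every document, then synthesize
    once across all of the summaries."""
    summarization = NUM_DOCS * TOKENS_PER_DOC * summary_rate
    synthesis = NUM_DOCS * TOKENS_PER_SUMMARY * synthesis_rate
    return summarization + synthesis


mixed = workload_cost(CHEAP_RATE, PREMIUM_RATE)          # cheap summaries, premium synthesis
all_premium = workload_cost(PREMIUM_RATE, PREMIUM_RATE)  # premium model throughout

print(f"mixed: {mixed:,} units, all premium: {all_premium:,} units")
print(f"single-model approach costs {all_premium / mixed:.1f}x as much")
```

With these assumed numbers the mixed approach comes to 2,100,000 units against 31,500,000 for the premium model alone, a factor of 15. The exact factor depends entirely on the assumed document and summary lengths.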

Adding New LLMs

There is one more important advantage to our approach. As new, even more powerful LLMs hit the market, we can integrate them into our platform. We connect to commercial LLMs like GPT and Claude through an application programming interface (API), which gives us the flexibility to connect to and take advantage of different LLMs.
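One common way to keep a platform open to new models is to hide each vendor behind a shared interface and look models up by role. The following is a sketch of that general pattern, assuming a hypothetical `LLMClient` interface and `ModelRegistry` class. It does not describe DiscoveryPartner’s internals, and `EchoClient` is a stand-in where a real adapter would call the vendor’s API.

```python
from typing import Dict, List, Protocol


class LLMClient(Protocol):
    """What calling code needs from any model: prompt in, text out."""

    def complete(self, prompt: str) -> str: ...


class ModelRegistry:
    """Maps a role name to whichever client currently fills that role."""

    def __init__(self) -> None:
        self._clients: Dict[str, LLMClient] = {}

    def register(self, name: str, client: LLMClient) -> None:
        self._clients[name] = client

    def get(self, name: str) -> LLMClient:
        if name not in self._clients:
            raise KeyError(f"No model registered under {name!r}")
        return self._clients[name]

    def names(self) -> List[str]:
        return sorted(self._clients)


class EchoClient:
    """Stand-in for a vendor adapter; a real one would call the vendor's API."""

    def __init__(self, label: str) -> None:
        self.label = label

    def complete(self, prompt: str) -> str:
        return f"[{self.label}] {prompt}"


registry = ModelRegistry()
registry.register("summarizer", EchoClient("fast model"))
registry.register("synthesizer", EchoClient("strong model"))

# A newly released model replaces the old one without changes to calling code.
registry.register("synthesizer", EchoClient("newer model"))
reply = registry.get("synthesizer").complete("Build a timeline.")
```

Because callers only ever ask the registry for a role, swapping in a new vendor is a one-line registration change.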

Hardly a week goes by without an announcement of a new “more powerful” large language model that will knock the current AI leaders off their pedestals. As these new LLMs continue to emerge, our adaptable framework lets us stay at the forefront of the technology and offer our clients an agile, powerful, and economical tool for an increasingly competitive, technology-driven environment.

Fast, flexible and cost effective. That’s our mantra.

About the Authors

John Tredennick (JT@Merlin.Tech) is the CEO and founder of Merlin Search Technologies, a software company leveraging generative AI and cloud technologies to make investigation and discovery workflow faster, easier and less expensive. Prior to founding Merlin, Tredennick had a distinguished career as a trial lawyer and litigation partner at a national law firm.

With his expertise in legal technology, he founded Catalyst in 2000, an international ediscovery technology company that was acquired in 2019 by a large public company. Tredennick regularly speaks and writes on legal technology and AI topics, and has authored eight books and dozens of articles. He has also served as Chair of the ABA’s Law Practice Management Section.

Dr. William Webber (wwebber@Merlin.Tech) is the Chief Data Scientist of Merlin Search Technologies. He completed his PhD in Measurement in Information Retrieval Evaluation at the University of Melbourne under Professors Alistair Moffat and Justin Zobel, and his post-doctoral research at the E-Discovery Lab of the University of Maryland under Professor Doug Oard.

With over 30 peer-reviewed scientific publications in the areas of information retrieval, statistical evaluation, and machine learning, he is a world expert in AI and statistical measurement for information retrieval and ediscovery. He has almost a decade of industry experience as a consulting data scientist to ediscovery software vendors, service providers, and law firms.

About Merlin Search Technologies

Merlin is a pioneering cloud technology company leveraging generative AI and cloud technologies to re-engineer legal investigation and discovery workflows. Our next-generation platform integrates GenAI and machine learning to make the process faster, easier and less expensive. We’ve also introduced Cloud Utility Pricing, an innovative software hosting model that charges by the hour instead of by the month, giving clients substantial savings on discovery costs when they turn off their sites.

With over twenty years of experience, our team has built and hosted discovery platforms for many of the largest corporations and law firms in the world. Learn more at merlin.tech.
