How Much Do Large Language Models Like GPT Cost?

Using the right LLM for the job will save you money!

By John Tredennick, Dr. William Webber and Lydia Zhigmitova

We recently wrote an article about Using Multi-LLM Systems for Investigations and Ediscovery: Fast, Flexible and Cost-Effective. One of the reasons we chose this path is because different large language models, e.g. GPT and Claude are better for different purposes. As we pointed out, using different LLMs for different jobs “affords the flexibility to use the best, most cost-effective LLM for each task required.”

In this article we focus on cost effectiveness. Why? Because pricing can make a difference when two tools can do the same job equally well. If one of the LLMs costs fifty times more than the other, you might want to pay attention. As we will show you, that is sometimes the case.

How Do Software Companies Charge for Large Language Models?

Many of us started using ChatGPT when it was free. Some of us moved to the $20 a month version to secure faster responses and access to later models but daily volumes were still limited. These licenses aren’t suitable for commercial purposes and are not our focus here.

For legal systems, we need to move to a commercial license and access GPT, the underlying engine for ChatGPT, through an API (application programming interface).

Pricing under these types of licenses is based on two considerations:

- The model being used, e.g. GPT 3.5 versus GPT 4.

- The volume of prompt tokens sent to or received from the LLM.

Before we get to specific prices for different models, let’s talk a bit about tokens. They are central to the analysis of which models are best suited for different purposes.

About Tokens

Tokens are the basic units of text or code that an LLM uses to process and generate language. Tokens can be characters, words, subwords or other segments of text or code. A token count of 1,000 typically equates to about 750 English words.

How many tokens in a prompt? This obviously depends on the length of information you send to the LLM. For our purposes, however, prompt lengths can be long because we typically send the text of ediscovery documents (or summaries of that text) to the LLM along with our request or question.

Why send the text? Because, for ediscovery at least, we typically ask the LLM to base its answer on the text of one or more discovery documents (email, documents, transcripts, etc.). As a result, the volume of text transmitted to the LLM can be much larger than the response.

For pricing purposes, we have found that the average volume of prompts is roughly ten times larger than the average volume of responses. Put another way, we typically send 1,000 tokens to the system for every 100 tokens received in response.

Why does this matter? Because most vendors charge one price for the volume of tokens submitted in the prompt (input) and another, larger price for the volume of tokens returned by the LLM in its answer (output).

Commercial Pricing

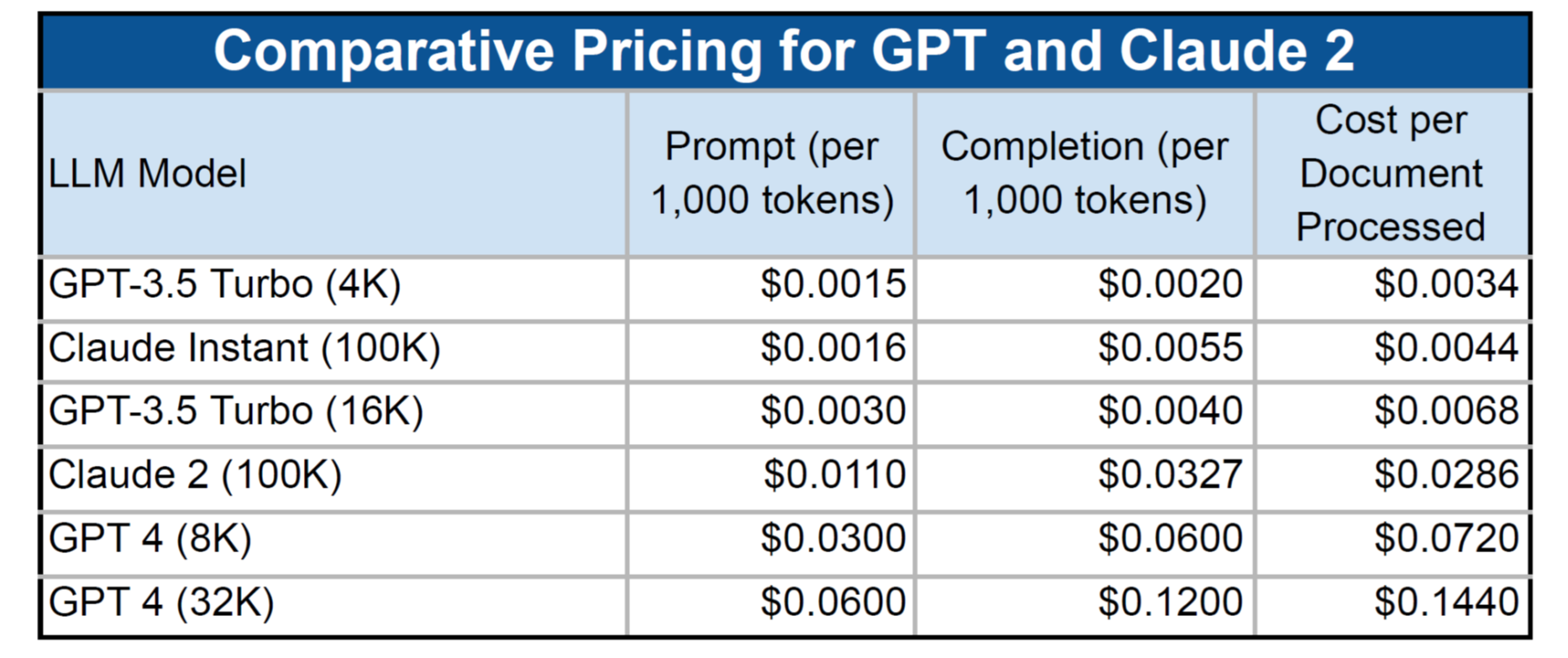

Here is a chart showing current pricing for two different LLMs: GPT (from OpenAI and Microsoft) and Claude 2 (from Anthropic)1. Both GPT and Claude come in different models, with pricing matching their capabilities2. Microsoft and OpenAI offer the same prices so they are grouped together.

1 There are other vendors on the market but these represent the two LLMs we currently use. You can apply the same analysis to different LLMs you may be considering.

2 GPT pricing is per 1,000 tokens. Anthropic prices per million tokens. For comparative purposes, we normalized pricing using 1,000 tokens for each.

The references in parentheses represent the size of the context window for each model. In essence, the context window limits how large the prompt can be for each request. The higher the prompt limit, the better, but costs increase as well. You can read more about context windows here: Are LLMs Like GPT Secure?.

Prices per 1,000 tokens are relatively small. To put things in perspective, we estimated the cost to send and return information for a single document that is 2,000 tokens in length (about 1,500 words).

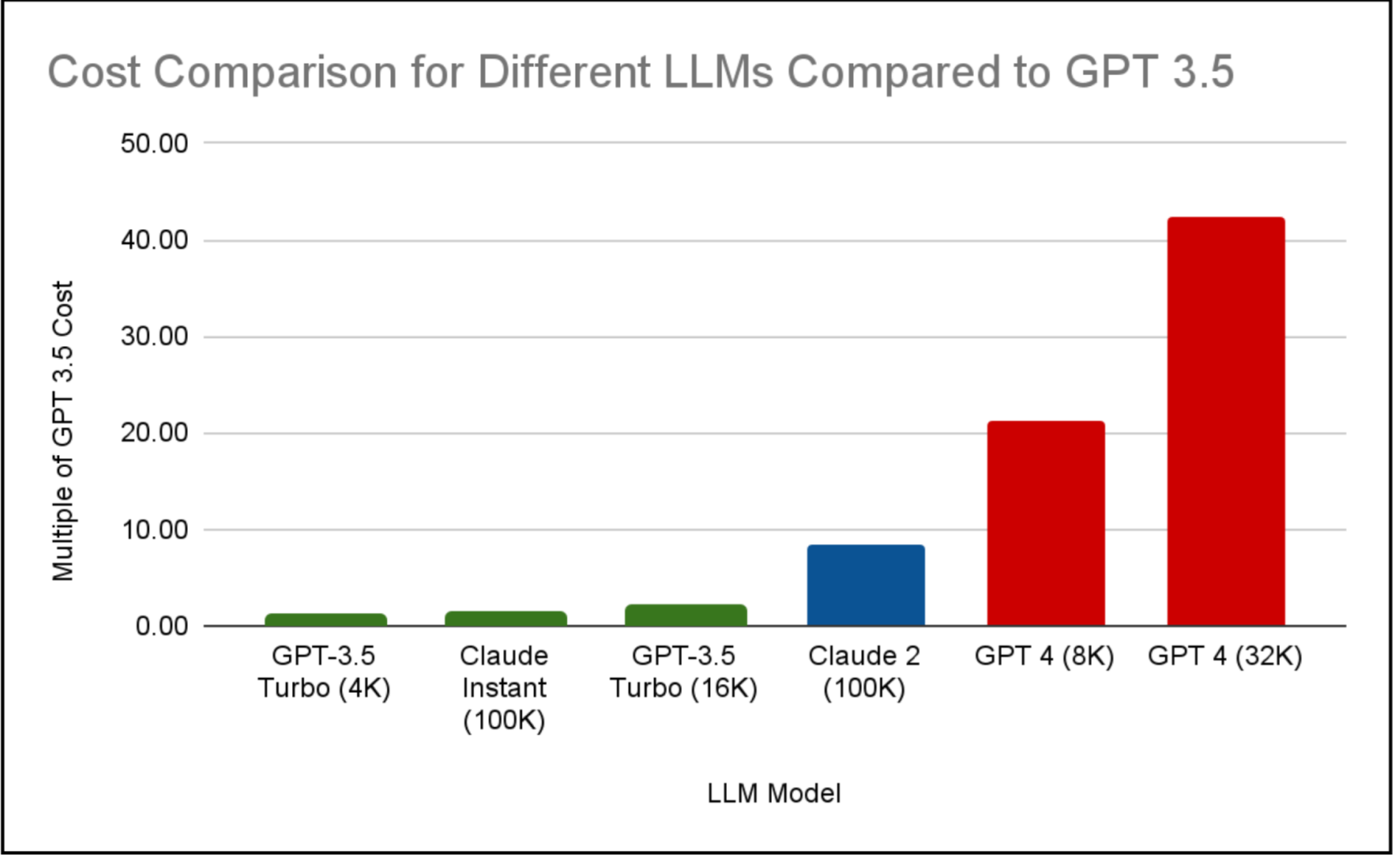

You can quickly see that the cost to use GPT 4 is much greater than for lesser models like GPT 3.5. This chart shows relative pricing as a multiple of the cheapest model: GPT 3.5 (4K):

GPT 4 (32K) costs over 40 times more than GPT 3.5. GPT 4 (8K) costs more than 20 times GPT 3.5. Do we need the power of GPT 4 for every task we might run?

That is the question we will address in the next section.

Getting Smart About How We Use LLMs

We chose to build a multi-LLM framework because we have found that lower-priced LLM models can do certain tasks as well as the higher-priced version and often do them much more quickly. For example, we typically summarize documents based on a topic request and submit the summary to the LLM for analysis rather than the entire document. This lets us submit more summaries to the LLM for analysis at one time than would be possible if we submitted entire documents.

For summaries, we often recommend using GPT 3.5 (4K) or GPT 3.5 (16K). We then send the summaries to GPT 4 (8K or 32K) for more refined analysis. The same logic applies to using Claude Instant (100K) for document summaries and Claude 2 (100K) for reports. GPT 3.5 and Claude Instant both do an excellent job at summarizing documents; our testing doesn’t show a measurable improvement with the more expensive models. However, we prefer the stronger models, GPT 4 and Claude 2 for final reports and recommendations.

With a multi-LLM framework, our clients can mix and match LLMs and individual models to get the most cost-effective results.

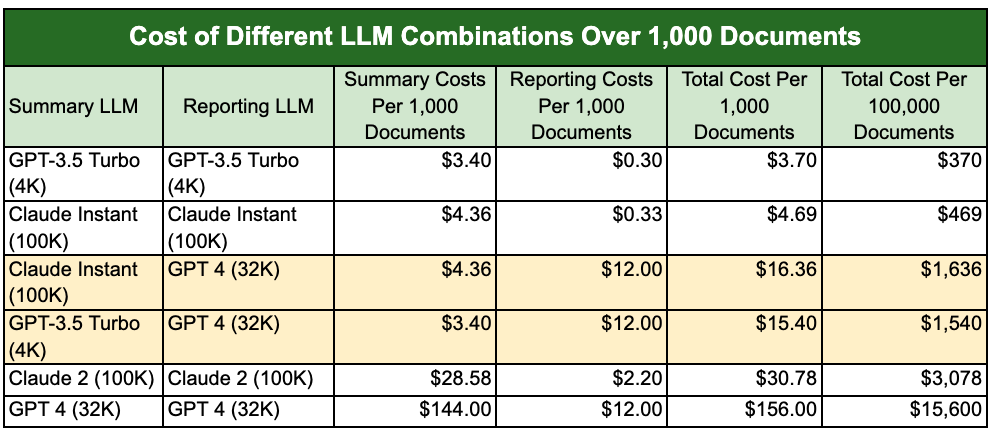

Here is a slightly more complicated chart showing how this approach can save on LLM costs using different combinations of LLMs and models.

You can quickly see how the costs vary between different combinations of LLMs and LLM models. In this case, we have highlighted two combinations that we think represent smart choices. We urge clients to consider GPT 3.5 or Claude Instant for summary work. Why? Because they are much faster than their more powerful siblings and less costly. And, the summaries don’t show the kind of improvement that justify the added costs in most cases. Where a client wants the additional horsepower provided by GPT 4 or Claude 2, it is easy to change the setting.

Why consider Claude Instant or Claude 2 in this mix? One simple reason is the larger context window. Claude 2 offers a three-times large context window which can be important for large documents. As an example, we can fit most transcripts into Claude Instant or Claude 2. They would have to be broken up to fit GPT’s smaller context window. This can make the difference in many cases. (We are thinking about checking text sizes before processing to automatically send larger documents to Claude 2 even if we use GPT for their analysis.)

Ultimately, the smart play depends on your matter and your documents. The one thing we can say for sure is that having a multi-LLM framework affords the flexibility to use the best, most cost-effective LLM for each task required. That is why we chose a different path than other vendors.

About the Authors

John Tredennick (JT@Merlin.Tech) is the CEO and founder of Merlin Search Technologies, a software company leveraging generative AI and cloud technologies to make investigation and discovery workflow faster, easier and less expensive. Prior to founding Merlin, Tredennick had a distinguished career as a trial lawyer and litigation partner at a national law firm.

With his expertise in legal technology, he founded Catalyst in 2000, an international ediscovery technology company that was acquired in 2019 by a large public company. Tredennick regularly speaks and writes on legal technology and AI topics, and has authored eight books and dozens of articles. He has also served as Chair of the ABA’s Law Practice Management Section. Tredennick is based in Denver, Colorado.

Dr. William Webber (wwebber@Merlin.Tech) is the Chief Data Scientist of Merlin Search Technologies. He completed his PhD in Measurement in Information Retrieval Evaluation at the University of Melbourne under Professors Alistair Moffat and Justin Zobel, and his post-doctoral research at the E-Discovery Lab of the University of Maryland under Professor Doug Oard.

With over 30 peer-reviewed scientific publications in the areas of information retrieval, statistical evaluation, and machine learning, Dr. Webber is a world expert in AI and statistical measurement for information retrieval and ediscovery. He has a decade of industry experience as a consulting data scientist to ediscovery software vendors, service providers, and law firms. Dr. Webber is based in Melbourne, Australia.

Lydia Zhigmitova (LZhigmitova@Merlin.Tech) is Senior Prompt Engineer at Merlin Search Technologies. She received her Masters in Philology from the Pushkin State Russian Language Institute, Moscow. Her thesis title was “Metaphors in Scientific Communication and Their Role in Teaching Russian as a Foreign Language” (Роль метафоры в научном лингвистическом тексте и её место на занятиях по РКИ).

Ms. Zhigmitova has a decade of experience in applied linguistics and digital content management. She works with Merlin data scientists and product engineers to develop prompt templates for our new GenAI platform, Discovery Partner™. She also assists Merlin clients in best practices for effective prompts and helps develop Merlin’s AI-driven content generation and analysis toolkit. Ms. Zhigmitova is based in Ulaanbaatar, Mongolia.

About Merlin Search Technologies

Merlin is a pioneering cloud technology company leveraging generative AI and cloud technologies to re-engineer legal investigation and discovery workflows. Our next generation platform integrates GenAI and machine learning to make the process faster, easier and less expensive. We’ve also introduced Cloud Utility Pricing, an innovative software hosting model that charges by the hour instead of by the month, saving clients substantial savings on discovery costs when they turn off their sites.

With over twenty years of experience, our team has built and hosted discovery platforms for many of the largest corporations and law firms in the world. Learn more at merlin.tech.

John Tredennick, CEO and founder of Merlin Search Technologies

JT@Merlin.Tech

Dr. William Webber, Merlin Chief Data Scientist

WWebber@Merlin.Tech

Transforming Discovery with GenAI

Take a look at our research and generative AI platform integration work on our GenAI page.

Subscribe

Get the latest news and insights delivered straight to your inbox!